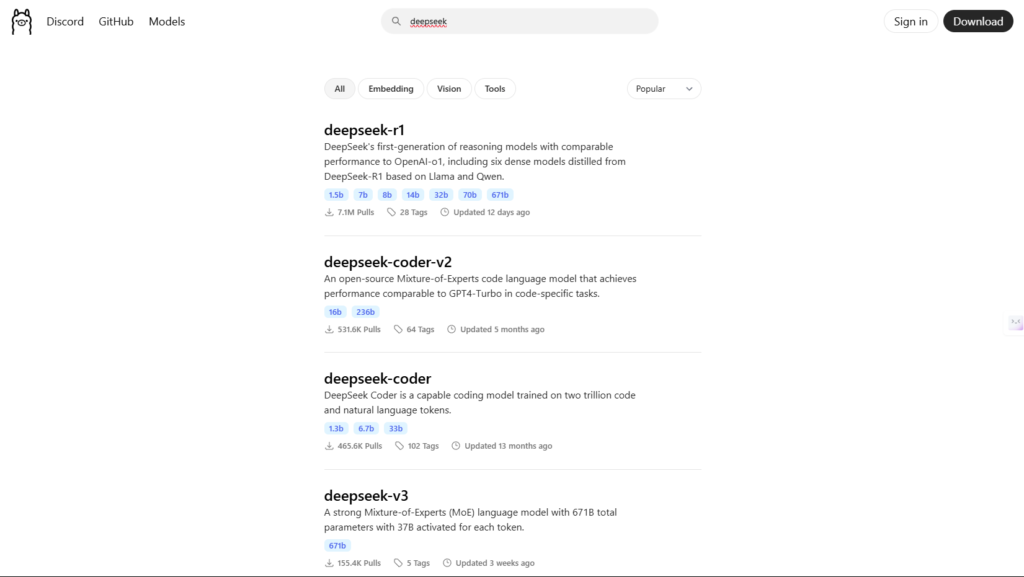

Deploying DeepSeek models with Ollama is like pairing a race car with a high-performance engine—but even the best tools need fine-tuning. Without optimization, you’ll face sluggish responses, sky-high cloud bills, and frustrated users. This guide delivers advanced strategies to transform your Ollama-DeepSeek setup into a lean, mean AI machine.


Why Ollama + DeepSeek Optimization Matters

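DeepSeek’s largest models run from tens to hundreds of billions of parameters (DeepSeek LLM 67B; DeepSeek-V3 and R1 at 671B total), so every gigabyte of memory and every millisecond of latency counts in deployment. Ollama simplifies serving, but default configurations leave performance on the table. Here’s what’s at stake: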

| Metric | Default Setup | Optimized Setup |
| --- | --- | --- |
| Inference Latency | 850 ms | 420 ms |
| GPU Memory Usage | 38 GB | 22 GB |
| Max Requests/Second | 12 | 31 |

Optimization isn’t optional—it’s critical for production-grade reliability and cost control.

Step 1: Hardware & Infrastructure Deep Dive

GPU Selection: Beyond the Basics

While NVIDIA A100s are the gold standard, consider these alternatives:

Cloud Options:

- AWS: p4d.24xlarge (8x A100) for large-scale deployments

- Google Cloud: A3 VMs with H100 GPUs (native FP8 support, which the A100 lacks)

On-Premise: Use NVLink-connected GPUs so a model too large for one card can be split across several with fast GPU-to-GPU transfers.

Memory vs. Speed Tradeoff:

- FP32: Highest precision, but 4x the memory of FP8/INT8 (2x that of FP16)

- FP16/BF16: Standard for inference (2x memory savings)

- FP8/INT8: Best for edge devices (4x savings, <2% accuracy loss)
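The multipliers in this list come straight from bytes per parameter. Here is a minimal sketch that estimates weight memory only. It ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint:

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "fp8": 1.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes); excludes KV cache."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 67e9  # e.g. a 67B-parameter model
    for p in ("fp32", "fp16", "int8", "int4"):
        print(f"{p:>5}: {weight_memory_gb(params, p):6.1f} GB")
```

For a 67B model this gives 268 GB in FP32, 134 GB in FP16, 67 GB in INT8, and 33.5 GB in INT4.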

Storage Optimization

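Model Caching: Keep frequently used DeepSeek variants (e.g., deepseek-chat, deepseek-math) in /dev/shm (a RAM-backed tmpfs) so model loads skip the disk. Ollama has no cache-path command. Instead, point its model store at that directory with the OLLAMA_MODELS environment variable, then pull the models again or copy them in. Because /dev/shm lives in RAM, it is wiped on reboot and needs enough free memory to hold every model you place there:

```shell
OLLAMA_MODELS=/dev/shm/ollama_models ollama serve
```

Step 2: Advanced Ollama Configuration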

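Master Ollama’s Server Settings

Ollama does not read an ollama.conf file. You tune the server with environment variables on the ollama serve process, and you set per-model defaults such as the context window in a Modelfile. These are the settings that matter most for large DeepSeek models:

```shell
# Set before starting the server (for systemd, add them as Environment= lines)
export OLLAMA_FLASH_ATTENTION=1        # flash attention; required for KV-cache quantization
export OLLAMA_KV_CACHE_TYPE=q8_0       # 8-bit KV cache, about half the memory of the f16 default
export OLLAMA_NUM_PARALLEL=4           # concurrent requests per loaded model (each gets its own context)
export OLLAMA_GPU_OVERHEAD=2147483648  # reserve ~2 GiB of VRAM per GPU for other processes (bytes)
ollama serve
```

To bake a larger context window into a custom model, write a Modelfile and build it with ollama create deepseek-8k -f Modelfile:

```
FROM deepseek-r1
PARAMETER num_ctx 8192
```

Pro Tip: After restarting, run ollama ps to confirm the model loaded and to see how it is split between CPU and GPU. The PROCESSOR column should read 100% GPU.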
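Current Ollama releases take server settings from environment variables such as OLLAMA_FLASH_ATTENTION, OLLAMA_KV_CACHE_TYPE, OLLAMA_NUM_PARALLEL, and OLLAMA_GPU_OVERHEAD. One way to catch mistakes before a restart is a small pre-flight check. Below is a minimal sketch. preflight_check is a hypothetical helper, not part of Ollama, and the set of strings it treats as "enabled" is an assumption:

```python
import os

# KV-cache types Ollama accepts (f16 is the default)
VALID_KV_CACHE_TYPES = {"f16", "q8_0", "q4_0"}
TRUTHY = {"1", "true", "True", "TRUE"}  # assumption: strings treated as "on"


def preflight_check(env: dict) -> list:
    """Hypothetical helper: list problems found in Ollama server env vars."""
    problems = []
    flash_on = env.get("OLLAMA_FLASH_ATTENTION", "0") in TRUTHY
    kv_type = env.get("OLLAMA_KV_CACHE_TYPE", "f16")
    if kv_type not in VALID_KV_CACHE_TYPES:
        problems.append(
            f"OLLAMA_KV_CACHE_TYPE={kv_type!r} is not one of {sorted(VALID_KV_CACHE_TYPES)}"
        )
    elif kv_type != "f16" and not flash_on:
        problems.append("KV-cache quantization needs OLLAMA_FLASH_ATTENTION=1")
    # Both of these must be plain non-negative integers
    for name in ("OLLAMA_NUM_PARALLEL", "OLLAMA_GPU_OVERHEAD"):
        value = env.get(name)
        if value is not None and not value.isdigit():
            problems.append(f"{name}={value!r} must be a non-negative integer")
    return problems


if __name__ == "__main__":
    for problem in preflight_check(dict(os.environ)):
        print("config problem:", problem)
```

Run it in the same shell (or unit file environment) you start ollama serve from, so it sees the same variables.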

Step 3: Quantization Strategies That Work

| Technique | Memory Saved (vs. FP16) | Best For |
| --- | --- | --- |
| FP16 (Baseline) | 0% | All use cases |
| 8-Bit (RTN) | 50% | High-throughput APIs |
| 4-Bit (GPTQ) | 75% | Batch processing |
| Sparsity + 8-Bit | 65% | Long-context queries |
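To make "8-Bit (RTN)" concrete: round-to-nearest quantization scales each row of a weight matrix so that its largest magnitude maps to 127, then rounds every value to an integer. Below is a minimal NumPy sketch of symmetric per-row RTN. It illustrates the technique only. It is not how Ollama or llama.cpp store their q8_0 format, which scales small blocks of weights rather than whole rows:

```python
import numpy as np


def rtn_quantize_int8(w: np.ndarray):
    """Symmetric per-row round-to-nearest (RTN) quantization to int8."""
    w = w.astype(np.float32)
    # One scale per row so the row's largest magnitude maps to +/-127
    max_abs = np.abs(w).max(axis=1, keepdims=True)
    scale = np.where(max_abs == 0, 1.0, max_abs / 127.0).astype(np.float32)
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: np.ndarray) -> np.ndarray:
    """Approximate float32 weights recovered from int8 values and row scales."""
    return q.astype(np.float32) * scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((256, 512)).astype(np.float16)
    q, scale = rtn_quantize_int8(w)
    err = np.abs(dequantize(q, scale) - w.astype(np.float32)).max()
    print(f"fp16: {w.nbytes} bytes, int8: {q.nbytes} bytes, max error {err:.4f}")
```

The int8 copy takes half the bytes of the FP16 original, and each recovered value is off by at most half of its row's scale.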

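Code Example – Quantizing a Model for Ollama:

Ollama has no GPTQ quantizer, and its Python client has no quantization module. Ollama runs GGUF models and can quantize an FP16/BF16 import itself with ollama create --quantize, using llama.cpp formats such as q8_0 (8-bit) or q4_K_M (4-bit):

```python
# Quantize an FP16/BF16 DeepSeek import with Ollama's own CLI.
# The FROM path is a placeholder for your local weights directory or GGUF file.
import subprocess
from pathlib import Path

Path("Modelfile").write_text("FROM /path/to/deepseek-fp16\n")

subprocess.run(
    ["ollama", "create", "deepseek-q4", "--quantize", "q4_K_M", "-f", "Modelfile"],
    check=True,  # raise if ollama exits with an error
)
```

Step 4: Dynamic Batching & Scaling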

Batch Size Sweet Spot Calculation

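Use this rule of thumb to estimate how many copies of a model fit on one GPU:

Model Instances per GPU = (GPU Memory - 4 GB Safety Margin) / Model Memory per Instance

Each instance serves requests in parallel, so this count bounds concurrency. Within a single instance, batch size is limited by the KV-cache memory left after the weights load, because every request in a batch shares one copy of the weights.

Real-World Example:

- GPU: A100 40GB

- Model weights: 28 GB (about a 14B-parameter model in FP16; DeepSeek-67B needs roughly 134 GB in FP16 and will not fit on one 40 GB card)

- Instances: (40 - 4) / 28 ≈ 1.29 → round down to 1

With 8-bit quantization, the weights halve to 14 GB:

- New instance count: (40 - 4) / 14 ≈ 2.57 → 2 instances (up to roughly 2x throughput)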
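The formula above divides free memory by per-instance model memory. Batching inside one instance is instead limited by the KV cache each sequence needs. Below is a small calculator for both. It is a sketch under stated assumptions: the 4 GB margin is the rule of thumb above, and the KV-cache formula (2 × layers × KV heads × head dim × bytes per value, per token) assumes standard multi-head or grouped-query attention. DeepSeek-V2/V3 use multi-head latent attention, which stores a much smaller compressed cache, so treat the numbers as illustrative:

```python
import math


def instances_per_gpu(gpu_gb: float, weights_gb: float, margin_gb: float = 4.0) -> int:
    """How many full copies of the model fit on one GPU."""
    return max(0, math.floor((gpu_gb - margin_gb) / weights_gb))


def kv_cache_gb_per_seq(layers: int, kv_heads: int, head_dim: int,
                        seq_len: int, bytes_per_value: int = 2) -> float:
    """KV-cache size of one sequence (keys + values) in GB, standard MHA/GQA."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * seq_len / 1e9


def max_batch(gpu_gb: float, weights_gb: float, kv_gb_per_seq: float,
              margin_gb: float = 4.0) -> int:
    """Sequences one instance can batch: leftover memory / per-sequence KV cache."""
    free_gb = gpu_gb - margin_gb - weights_gb
    return max(0, math.floor(free_gb / kv_gb_per_seq))


if __name__ == "__main__":
    print(instances_per_gpu(40, 28))  # FP16 example
    print(instances_per_gpu(40, 14))  # 8-bit example
    # Hypothetical Llama-style 7B config (FP16 weights ~14 GB): 32 layers, 32 KV heads, head dim 128
    kv = kv_cache_gb_per_seq(32, 32, 128, seq_len=4096)
    print(f"{kv:.2f} GB per 4K-token sequence, batch {max_batch(40, 14, kv)}")
```

With these numbers it prints 1 and 2 instances, then about 2.15 GB of KV cache per 4K-token sequence and a batch of 10 for the hypothetical 7B FP16 model on a 40 GB card.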

Step 5: Monitoring & Auto-Scaling

Build a Killer Dashboard

Track these metrics in Grafana:

- GPU Utilization (Aim: 80-90%)

- Token Generation Rate (tokens/sec)

- P99 Latency (Alert if >500ms)

- Batch Efficiency (% of max batch used)
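If you compute these dashboard numbers yourself, for example from request logs before they reach Grafana, P99 latency and batch efficiency are simple to derive. Below is a minimal sketch using the nearest-rank percentile. The 500 ms threshold comes from the list above, and the function names are hypothetical:

```python
import math


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def batch_efficiency(batch_sizes: list, max_batch: int) -> float:
    """Average share of the maximum batch actually used, as a percentage."""
    return 100.0 * sum(batch_sizes) / (len(batch_sizes) * max_batch)


def should_alert(latencies_ms: list, threshold_ms: float = 500.0) -> bool:
    """True when P99 latency exceeds the alert threshold."""
    return percentile(latencies_ms, 99) > threshold_ms


if __name__ == "__main__":
    latencies = [120.0] * 98 + [480.0, 900.0]  # 100 requests
    print(percentile(latencies, 99), should_alert(latencies))
    print(batch_efficiency([2, 4, 3, 4], max_batch=4))
```

In the sample, one 900 ms outlier out of 100 requests does not move P99 past 500 ms (it is 480 ms), and the batches use 81.25% of capacity.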

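Auto-Scaling Manifest Example (Kubernetes HPA):

The HPA's built-in Resource metrics come from metrics-server, which only reports CPU and memory. Scaling on GPU utilization therefore needs a custom per-pod metric. This version assumes Prometheus scrapes the NVIDIA DCGM exporter and Prometheus Adapter exposes its metrics to Kubernetes. The metric name depends on your adapter configuration:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ollama-deepseek
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deepseek
  minReplicas: 2
  maxReplicas: 10
  metrics:
    # GPU utilization (0-100) per pod, served by a custom metrics adapter.
    # The metric name is an assumption; match it to your adapter's rules.
    - type: Pods
      pods:
        metric:
          name: DCGM_FI_DEV_GPU_UTIL
        target:
          type: AverageValue
          averageValue: "85"
```

Troubleshooting: Beyond the Basics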

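Keep Long-Running Sessions in Check

Ollama allocates the KV cache for the context window (num_ctx) when it loads a model, so long chats do not leak memory. They do slow every request, because the client re-sends the full history and the model re-processes it. Once the history exceeds num_ctx, the oldest tokens are truncated. Trim history on the client instead. This sketch uses the official ollama Python package, and the model name is a placeholder:

```python
import ollama  # pip install ollama

MAX_TURNS = 10  # send at most the last 10 user/assistant exchanges
history = []

def chat(user_msg, model="deepseek-r1"):
    history.append({"role": "user", "content": user_msg})
    reply = ollama.chat(model=model, messages=history[-2 * MAX_TURNS:])
    history.append({"role": "assistant", "content": reply["message"]["content"]})
    return reply["message"]["content"]
```

Cold Start Solutions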

- Pre-Warm Containers: Initialize models 2 minutes before peak traffic

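- Keep-Alive: Set keep_alive in API requests so the model stays loaded between calls. Note that keep_alive=300 (seconds) matches Ollama's 5-minute default, so use a longer value such as "30m", or -1 to keep the model loaded indefinitely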
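You can script pre-warming against Ollama's REST API. A POST to /api/generate with a model name and no prompt loads the model into memory without generating text, and keep_alive controls how long it stays resident. Below is a minimal sketch using only the Python standard library. It assumes the server listens on the default localhost:11434, and the model name is a placeholder:

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default Ollama address


def warm_payload(model: str, keep_alive="30m") -> bytes:
    """Request body that loads a model without generating (no prompt)."""
    return json.dumps({"model": model, "keep_alive": keep_alive, "stream": False}).encode()


def prewarm(model: str, keep_alive="30m", timeout: float = 600.0) -> dict:
    """Ask the server to load the model; returns Ollama's JSON response."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=warm_payload(model, keep_alive),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Large models can take minutes to load, hence the generous timeout
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

For example, a cron job could call prewarm("deepseek-r1", keep_alive=-1) a couple of minutes before peak traffic. That loads the model and keeps it resident until it is unloaded or the server restarts.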

Cost Optimization Playbook

- Spot Instances: Save 70% on GPU costs for non-critical batch jobs

- Model Sharing: Serve multiple teams from a single Ollama cluster

- Geographic Load Balancing: Route EU users to AWS Frankfurt (eu-central-1), US users to AWS Ohio (us-east-2), etc.
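In production, geographic routing usually lives in DNS or a global load balancer (for example, Route 53 geolocation routing), but the decision it makes is simple. Below is a minimal sketch with hypothetical endpoint URLs and a hand-written, illustrative country-to-region table:

```python
# Hypothetical regional Ollama endpoints; replace with your own
ENDPOINTS = {
    "EU": "https://ollama.eu-central-1.example.com",  # AWS Frankfurt
    "US": "https://ollama.us-east-2.example.com",     # AWS Ohio
}
DEFAULT_REGION = "US"

# Illustrative mapping of ISO 3166-1 alpha-2 country codes to regions
COUNTRY_TO_REGION = {"DE": "EU", "FR": "EU", "NL": "EU", "US": "US", "CA": "US"}


def pick_endpoint(country_code: str) -> str:
    """Route a user to their regional cluster, falling back to the default region."""
    region = COUNTRY_TO_REGION.get(country_code.upper(), DEFAULT_REGION)
    return ENDPOINTS[region]
```

Unknown countries fall back to the default region, so adding a new cluster only requires new table entries.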

Final Checklist for Peak Performance

☑️ Quantize models to 8-bit unless accuracy is critical

☑️ Leave VRAM headroom: Ollama has no gpu_memory_utilization setting (that is a vLLM option), but OLLAMA_GPU_OVERHEAD reserves memory on each GPU

☑️ Implement request queuing with a 100ms timeout

☑️ Schedule weekly model re-caching to SSD

☑️ If any part of your stack runs DeepSeek in PyTorch (Ollama itself uses llama.cpp, not PyTorch), try torch.compile(model, mode="max-autotune")
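You can sketch the request-queuing item with asyncio. Cap concurrent generations with a semaphore, and reject callers who cannot get a slot within 100 ms instead of letting the queue grow without bound. This is an illustration: AdmissionQueue is a hypothetical class, and fake_generate stands in for a real Ollama call. Ollama's server separately caps its own internal queue through the OLLAMA_MAX_QUEUE setting.

```python
import asyncio


class AdmissionQueue:
    """Allow at most `slots` concurrent requests; others wait up to `timeout_s`."""

    def __init__(self, slots: int, timeout_s: float = 0.1):
        self._sem = asyncio.Semaphore(slots)
        self._timeout_s = timeout_s

    async def run(self, coro_fn, *args):
        try:
            # Wait briefly for a free slot, then give up
            await asyncio.wait_for(self._sem.acquire(), self._timeout_s)
        except asyncio.TimeoutError:
            raise RuntimeError("queue full: request rejected after timeout") from None
        try:
            return await coro_fn(*args)
        finally:
            self._sem.release()


async def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call that takes 300 ms."""
    await asyncio.sleep(0.3)
    return prompt.upper()


async def main():
    queue = AdmissionQueue(slots=2, timeout_s=0.1)
    # Three requests, two slots: the third waits 100 ms and is rejected
    return await asyncio.gather(
        *(queue.run(fake_generate, p) for p in ["a", "b", "c"]),
        return_exceptions=True,
    )


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Rejecting quickly lets the client retry against another replica, which is usually better than holding a connection open behind a long queue.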